SOC 2 Compliance for AI-as-a-Service (AaaS) in 2026: Automating Controls and AI Risk Oversight

AI doesn’t stand still. Models evolve, data pipelines change, and outputs adapt.

In 2026, SOC 2 for AI-as-a-Service is no longer just about security. It’s also about AI risk oversight.

For AaaS providers (ML platforms, AI APIs, model hosting), buyers want two things: proof of security and trust in how the AI behaves.

Quick snapshot: SOC 2 for AaaS in 2026

| Old view | New view | Winning strategy |
|---|---|---|
| SOC 2 = IT controls | SOC 2 = Security + Privacy + AI governance | Automation + continuous oversight |
| Point-in-time effort | Controls must operate over time | Evidence collected continuously |
| “We’re secure” | “We can prove it, and explain AI changes” | Clear ownership + auditable pipelines |

Welcome to the new SOC 2 reality for AI companies

In 2026, customers aren’t only asking, “Is our data secure?”

They’re also asking:

- Can we trust your models?

- How do you prevent misuse or bias?

- Who can change models and when?

- Can you prove how decisions are made?

Why AaaS companies are under a microscope

AI-as-a-Service providers sit in a high-risk position. You often control:

- Training data

- Models and weights

- Inference pipelines

- Customer inputs and outputs

A single flaw can scale instantly across customers. That’s why AI trust is now a buying criterion.

SOC 2 and the rise of AI risk oversight

SOC 2 has not added AI-specific criteria. Instead, AI expectations are showing up in how auditors interpret the existing Trust Services Criteria.

For AaaS, that typically means deeper scrutiny around:

- Change management for models (review, approval, rollback)

- Access controls for training data and model registries

- Monitoring of model behavior (drift, anomalies, misuse patterns)

- Privacy controls around prompts, logs, and outputs
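Drift monitoring is the item on this list that most often turns into a question about method. As one illustration, here is a minimal Python sketch of the Population Stability Index (PSI), a common drift signal that compares a baseline distribution of model scores with recent production scores. The function names, the bin count, and the 0.2 alert threshold (a widely used rule of thumb, not a SOC 2 requirement) are assumptions for illustration.

```python
import math
from typing import Sequence


def psi(baseline: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between two samples of model scores.

    Bin edges come from the baseline's min/max; a small epsilon keeps
    empty bins from producing log(0) or division by zero.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width if the baseline is constant
    eps = 1e-6

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bin.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    p, q = proportions(baseline), proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical alert threshold; 0.2 is a common rule of thumb, tune per model.
DRIFT_THRESHOLD = 0.2


def drift_alert(baseline: Sequence[float], current: Sequence[float]) -> bool:
    """True when recent scores have drifted past the threshold."""
    return psi(baseline, current) > DRIFT_THRESHOLD
```

A check like this only becomes audit evidence when it runs on a schedule and its results, including alerts and follow-up actions, are retained.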

The new AI risks SOC 2 auditors care about

For AaaS companies, SOC 2 audits increasingly surface questions in four areas:

Model integrity

- Who can deploy or update models?

- Are changes reviewed and approved?

Data governance

- What data is used for training?

- How is PII protected or excluded?

Algorithmic risk

- How do you detect bias or drift?

- Are outputs monitored for anomalies?

Privacy in AI

- Can personal data leak through outputs?

- Are prompts and responses logged securely?

If you can’t answer these confidently, trust breaks down fast, especially in enterprise sales cycles.
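On the privacy side, one simple control is redacting obvious personal data from prompts and responses before anything is written to logs. The sketch below assumes two illustrative regular expressions (email addresses and North American-style phone numbers) and a hypothetical in-memory log; production systems typically use a dedicated PII detection tool, because patterns like these miss many formats.

```python
import re

# Illustrative patterns only; they will miss many real-world PII formats.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(text: str) -> str:
    """Replace matched PII with fixed placeholders before logging."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def log_interaction(prompt: str, response: str, log: list[dict]) -> None:
    """Write only redacted text to the (hypothetical) log store."""
    log.append({"prompt": redact(prompt), "response": redact(response)})
```

Redacting at write time, rather than when logs are read, means unredacted prompts never land in log storage in the first place.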

Why manual compliance can’t keep up with AI

AI environments change daily. Manual compliance assumes stability. That gap creates risk.

- Spreadsheets don’t notice model updates

- Folders don’t capture pipeline changes

- People miss access drift when teams move fast
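Access drift is a good example of something automation catches easily. This sketch compares live grants on a resource such as a model registry against an approved access list, and flags grants that were never approved or that belong to users who are no longer active. The `Grant` type, role names, and inputs are hypothetical; real inputs would come from your identity provider and HR or ticketing systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    user: str
    resource: str  # e.g. "model-registry" (hypothetical resource name)
    role: str      # e.g. "deployer", "reader" (hypothetical roles)


def access_drift(
    current: set[Grant], approved: set[Grant], active_users: set[str]
) -> dict[str, set[Grant]]:
    """Compare live grants with approved ones.

    - unapproved: grants that exist but were never approved
    - orphaned: grants held by users who are no longer active (e.g. leavers)
    """
    return {
        "unapproved": current - approved,
        "orphaned": {g for g in current if g.user not in active_users},
    }
```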

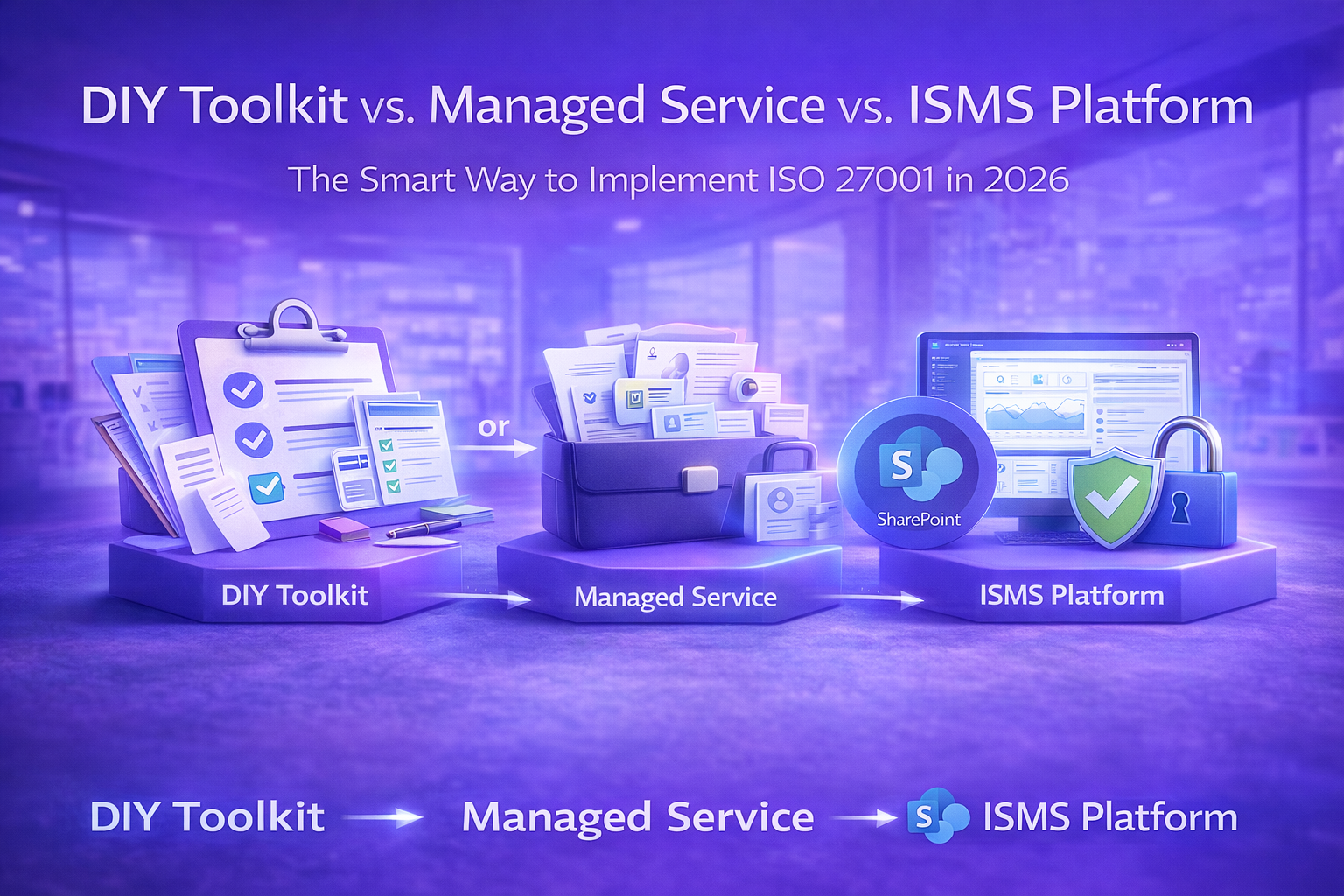

Automation: the only scalable answer

For AaaS providers, continuous compliance automation is no longer optional.

Automation enables:

- Ongoing evidence collection

- Real-time visibility into control status

- Monitoring as models evolve

- Reduced audit friction and fewer fire drills
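As a small-scale illustration of ongoing evidence collection, the sketch below turns each control check into a timestamped record and chains records by hash, so a record edited after the fact no longer verifies. The field names, control IDs, and the hash-chaining approach are assumptions for illustration; SOC 2 does not prescribe an evidence format.

```python
import hashlib
import json
from datetime import datetime, timezone


def add_evidence(chain: list[dict], control_id: str, result: str, details: dict) -> dict:
    """Append a tamper-evident evidence record to an in-memory chain."""
    record = {
        "control_id": control_id,  # hypothetical ID, e.g. "CM-01"
        "result": result,          # e.g. "pass" or "fail"
        "details": details,
        "collected_at": datetime.now(timezone.utc).isoformat(),
        "prev_hash": chain[-1]["hash"] if chain else "",
    }
    payload = json.dumps(record, sort_keys=True).encode()
    record["hash"] = hashlib.sha256(payload).hexdigest()
    chain.append(record)
    return record


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every hash and link; any edited record breaks verification."""
    prev = ""
    for rec in chain:
        body = {k: v for k, v in rec.items() if k != "hash"}
        if body["prev_hash"] != prev:
            return False
        expected = hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()
        if rec["hash"] != expected:
            return False
        prev = rec["hash"]
    return True
```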

Building or selling AI-as-a-Service?

Prepare for SOC 2 with AI risk oversight in mind, without slowing engineering.

Security and privacy by design in AI development

The strongest AaaS companies bake controls into their pipelines. This typically includes:

- Secure model registries and protected artifacts

- Controlled access for data scientists and engineers

- Logged model changes with approvals and rollback paths

- Clear separation of dev / staging / production

- Privacy-aware data handling for prompts, logs, and outputs
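Logged model changes with approvals and rollback paths can be enforced as a gate in the deployment pipeline. Here is a minimal sketch, assuming a hypothetical `ModelChange` record and a three-stage dev, staging, production flow: it rejects skipped stages, self-approval, and production deploys without a rollback target.

```python
from dataclasses import dataclass, field
from typing import Optional

# Promotion must follow this order; skipping a stage is rejected.
STAGES = ["dev", "staging", "production"]


@dataclass
class ModelChange:  # hypothetical change record
    model: str
    version: str
    author: str
    from_stage: str
    to_stage: str
    approvers: set[str] = field(default_factory=set)
    rollback_version: Optional[str] = None  # last known-good version


def check_promotion(change: ModelChange, min_approvals: int = 1) -> list[str]:
    """Return policy violations; an empty list means the change may proceed.

    Raises ValueError if either stage is not in STAGES.
    """
    problems = []
    if STAGES.index(change.to_stage) != STAGES.index(change.from_stage) + 1:
        problems.append("stages must be promoted in order")
    # Approvals only count if they come from someone other than the author.
    if len(change.approvers - {change.author}) < min_approvals:
        problems.append("needs approval from someone other than the author")
    if change.to_stage == "production" and not change.rollback_version:
        problems.append("production deploys need a rollback target")
    return problems
```

Returning a list of violations rather than a single boolean means the rejection reasons can be logged alongside the change ticket as evidence.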

Continuous monitoring as models learn

A SOC 2 Type II report tests whether controls operated effectively across an entire review period, not just on the day of the audit.

Automation helps AaaS teams stay audit-ready even during rapid iteration by capturing the right evidence as things change:

| What changes fast | What automation should capture |
|---|---|
| Model versions and deployments | Approvals, change tickets, deployment logs, rollback evidence |
| Access and privileged actions | Access reviews, admin actions, audit trails, exception handling |
| Data and output risk | Monitoring, anomaly alerts, privacy checks, retention rules |
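Retention rules are easy to state and easy to forget. Here is a minimal sketch of enforcing one on prompt and output logs, assuming a hypothetical 30-day window and records that each carry a timezone-aware `logged_at` timestamp.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention window; set it from your own retention policy.
RETENTION = timedelta(days=30)


def apply_retention(records: list[dict], now: datetime) -> tuple[list[dict], int]:
    """Keep records newer than the retention window; report how many were purged.

    Each record is assumed to carry a timezone-aware "logged_at" datetime.
    """
    cutoff = now - RETENTION
    kept = [r for r in records if r["logged_at"] >= cutoff]
    return kept, len(records) - len(kept)


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    logs = [{"logged_at": now - timedelta(days=d)} for d in (1, 10, 45)]
    kept, purged = apply_retention(logs, now)
    print(f"kept={len(kept)} purged={purged}")
```

Recording the purge count on each run gives you evidence that the retention rule actually operated during the audit period.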

Tired of compliance slowing down AI innovation?

Adopt continuous SOC 2 compliance for AI services and keep delivery moving.

How Canadian Cyber helps AaaS companies win trust

Canadian Cyber understands both sides of the equation: fast AI delivery and rigorous assurance.

We help AI companies by:

- Designing SOC 2 programs that fit AaaS model lifecycles

- Addressing AI-specific risk within SOC 2 controls

- Implementing compliance automation and evidence workflows

- Providing ongoing vCISO oversight and audit readiness support

The trust economy of 2026

In 2026, AI buyers won’t just compare features. They’ll compare governance.

SOC 2 compliance with real AI risk oversight separates trusted platforms from risky experiments.

Final thought

AI learns fast. Compliance must keep up.

For AI-as-a-Service providers, SOC 2 in 2026 is about continuous oversight, automation, and responsible governance.

Make SOC 2 a strength for your AI platform

Partner with Canadian Cyber for AaaS SOC 2 readiness, automation, and AI risk oversight.
